We have been testing voice agents for the past two years. For a long time, the verdict was always the same: “Impressive, but not ready for a real customer.” That changed in late 2025.

We have extensively tested the latest stack, and the difference is night and day. Here is why voice agents are finally ready for widespread adoption in 2026.

1. The Race to Zero Latency: Native vs. Modular

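The awkward three-second pause that used to define voice AI is gone. In 2026, we are seeing “end-to-end” latency (from the moment the caller stops speaking to the moment the agent starts replying) drop below 300ms, close to the roughly 200ms gap people typically leave between turns in conversation. This has been achieved through two converging technical breakthroughs: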

The Native Audio Revolution

Models like OpenAI’s GPT-4o Realtime and Google’s Gemini 2.0 Flash have moved beyond the old “transcoding” pipeline (Speech-to-Text → language model → Text-to-Speech). These models process audio natively: they hear tone, intonation, and speaking speed directly and respond with very little delay. This “multimodal” approach eliminates the overhead of converting speech to text and back again.

The Hyper-Optimized Modular Stack

For developers who prefer control over every component, the modular “pipeline” has also become blazing fast. The hero here is Cartesia’s Sonic-3. Released in late 2025, Sonic-3 is a text-to-speech engine with a reported latency of around 90ms.

By combining ultra-fast LLM inference providers (like Groq) with Sonic-3’s near-instant voice rendering, businesses can now build custom, modular agents that rival the native giants on speed while offering more control over the specific voice and logic.
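To see why every stage matters, it helps to write the modular pipeline down as a latency budget. The Python sketch below sums the delay each stage adds before the caller hears audio and checks it against the 300ms target. Only the 90ms text-to-speech figure comes from the Sonic-3 claim above; the speech-to-text and LLM values are hypothetical placeholders, not measurements.

```python
from dataclasses import dataclass

TARGET_MS = 300  # end-to-end target from the text above


@dataclass(frozen=True)
class Stage:
    name: str
    latency_ms: int  # delay this stage adds before the caller hears audio


def total_latency(stages: list[Stage]) -> int:
    """Stages run one after another, so their delays add up."""
    return sum(stage.latency_ms for stage in stages)


def within_budget(stages: list[Stage], target_ms: int = TARGET_MS) -> bool:
    return total_latency(stages) <= target_ms


# HYPOTHETICAL placeholder values; only the 90ms TTS figure comes from the article.
pipeline = [
    Stage("speech-to-text (end of utterance)", 80),
    Stage("LLM time to first token", 100),
    Stage("text-to-speech time to first audio", 90),
]

if __name__ == "__main__":
    for stage in pipeline:
        print(f"{stage.name:<38} {stage.latency_ms:>4} ms")
    print(f"{'total':<38} {total_latency(pipeline):>4} ms (target {TARGET_MS} ms)")
    print("within budget" if within_budget(pipeline) else "over budget")
```

The sum leaves out network hops and telephony overhead, which also eat into the budget, so real deployments should measure end to end rather than rely on per-stage numbers.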

2. Platforms Are Now “Orchestration Brains”

In the past, building a voice agent meant stitching together APIs and hoping they held together. Now, platforms like Vapi and ElevenLabs have evolved into robust orchestration layers that handle the chaos of real-time telephony.

Vapi has become the infrastructure backbone for many teams. There is no lock-in with any provider: you can assemble your pipeline and workflow like LEGO bricks, swapping models, voices, and transcribers at will. It also handles phone-line jitter, “barge-in” (when a user interrupts the AI), and audio stream routing automatically.
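Barge-in is easy to describe and fiddly to get right. The sketch below shows the core idea with Python’s asyncio: the agent’s reply plays chunk by chunk, and playback is cancelled the moment the caller starts talking. The `speak` coroutine and the event standing in for voice activity detection are hypothetical stand-ins; this illustrates the technique, not how Vapi implements it.

```python
import asyncio


async def speak(chunks: list[str], played: list[str], chunk_ms: int = 50) -> None:
    """HYPOTHETICAL stand-in for TTS playback: 'plays' one audio chunk at a time."""
    for chunk in chunks:
        played.append(chunk)
        await asyncio.sleep(chunk_ms / 1000)


async def speak_with_barge_in(
    chunks: list[str], caller_started_speaking: asyncio.Event
) -> tuple[list[str], bool]:
    """Play the agent's reply, but stop as soon as the caller starts talking.

    Returns the chunks actually played and whether the caller interrupted.
    """
    played: list[str] = []
    playback = asyncio.create_task(speak(chunks, played))
    interrupt = asyncio.create_task(caller_started_speaking.wait())
    done, _ = await asyncio.wait(
        {playback, interrupt}, return_when=asyncio.FIRST_COMPLETED
    )
    if playback in done:
        interrupted = False
        interrupt.cancel()  # reply finished; stop listening for this turn
    else:
        interrupted = True
        playback.cancel()  # caller barged in; cut the audio immediately
    # Let the cancelled task unwind; its CancelledError is collected, not raised.
    await asyncio.gather(playback, interrupt, return_exceptions=True)
    return played, interrupted


async def demo() -> tuple[list[str], bool]:
    caller_speaks = asyncio.Event()  # set by voice activity detection in a real system
    reply = ["Your order", "shipped on Monday", "and should arrive", "tomorrow."]

    async def simulate_interruption(delay_s: float) -> None:
        await asyncio.sleep(delay_s)
        caller_speaks.set()

    trigger = asyncio.create_task(simulate_interruption(0.12))
    result = await speak_with_barge_in(reply, caller_speaks)
    await trigger
    return result


if __name__ == "__main__":
    played, interrupted = asyncio.run(demo())
    print(f"played {len(played)} chunk(s), interrupted={interrupted}")
```

Racing the playback task against the interrupt event with `asyncio.wait(..., FIRST_COMPLETED)` keeps the cut-off immediate instead of waiting for the current sentence to finish.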

ElevenLabs has moved beyond just “great voices” to a full Conversational AI stack. Their Agent Workflows allow agents to retain context over long conversations and handle complex logic without needing a separate brain.

3. MCP: The “USB-C” for Enterprise Knowledge

Perhaps the most significant breakthrough for 2026 is the widespread adoption of the Model Context Protocol (MCP).

Until recently, connecting a voice agent to your internal data (CRM, inventory, Notion) was a bespoke, fragile integration nightmare. MCP changed that. It is an open standard that gives AI applications one consistent way to connect to tools and data sources, with access limited to what each server exposes.

Universal Connection: Your voice agent doesn’t just have general knowledge; it has your knowledge. When a customer asks “Is my order ready?”, the agent calls a tool on an MCP server, which queries your internal SQL database, and answers on the spot.

The Power of Reusability: This is the game changer. Once we build an MCP server for your specific business logic, any MCP-compatible agent can reuse it. Your voice agent, your website chatbot, and your internal Slack assistant all share exactly the same tools and data access. Build once, deploy everywhere.
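To show what “standardized” means in practice, here is a stdlib-only Python sketch of the JSON-RPC 2.0 messages an MCP client sends to discover and call tools (`tools/list` and `tools/call`), answered by a toy handler that looks up an order in an in-memory SQLite database. The `get_order_status` tool, the `orders` table, and the hand-rolled dispatcher are hypothetical; a real server would use an MCP SDK and a proper transport (stdio or HTTP). Because every client sends the same messages, the same server can back a voice agent, a chatbot, and a Slack assistant.

```python
import json
import sqlite3

# HYPOTHETICAL internal database: an in-memory SQLite table of orders.
db = sqlite3.connect(":memory:")
db.execute("CREATE TABLE orders (id TEXT PRIMARY KEY, status TEXT NOT NULL)")
db.executemany(
    "INSERT INTO orders (id, status) VALUES (?, ?)",
    [("A1001", "ready for pickup"), ("A1002", "still being prepared")],
)

# Tool description in the shape MCP's tools/list result uses
# (name, description, JSON Schema for the arguments). The tool itself is hypothetical.
TOOLS = [
    {
        "name": "get_order_status",
        "description": "Look up the current status of a customer order.",
        "inputSchema": {
            "type": "object",
            "properties": {"order_id": {"type": "string"}},
            "required": ["order_id"],
        },
    }
]


def get_order_status(order_id: str) -> str:
    # Parameterized query: caller input is never spliced into the SQL text.
    row = db.execute("SELECT status FROM orders WHERE id = ?", (order_id,)).fetchone()
    return f"Order {order_id} is {row[0]}." if row else f"No order found with id {order_id}."


def rpc_response(req_id, *, result=None, error=None) -> str:
    """Wrap a result or an error in a JSON-RPC 2.0 response."""
    msg = {"jsonrpc": "2.0", "id": req_id}
    if error is not None:
        msg["error"] = error
    else:
        msg["result"] = result
    return json.dumps(msg)


def handle(request_json: str) -> str:
    """Answer one tools/list or tools/call request; anything else is an error."""
    req = json.loads(request_json)
    req_id, method = req.get("id"), req.get("method")
    if method == "tools/list":
        return rpc_response(req_id, result={"tools": TOOLS})
    if method == "tools/call":
        params = req.get("params", {})
        if params.get("name") != "get_order_status":
            return rpc_response(req_id, error={"code": -32602, "message": "Unknown tool"})
        order_id = str(params.get("arguments", {}).get("order_id", ""))
        content = [{"type": "text", "text": get_order_status(order_id)}]
        return rpc_response(req_id, result={"content": content, "isError": False})
    return rpc_response(req_id, error={"code": -32601, "message": "Method not found"})


if __name__ == "__main__":
    # What any client (voice agent, chatbot, Slack bot) would send for "Is my order ready?"
    request = {
        "jsonrpc": "2.0",
        "id": 1,
        "method": "tools/call",
        "params": {"name": "get_order_status", "arguments": {"order_id": "A1001"}},
    }
    print(handle(json.dumps(request)))
```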

4. Hyper-Realism and Emotional Intelligence

The robotic monotone is extinct. The latest generation of models, from native speech-to-speech systems to expressive text-to-speech engines, can understand and reproduce nuance such as tone, pacing, and emotion.

Cartesia Sonic-3 has introduced fine-grained emotion control. We can now direct the agent to “sigh,” “laugh,” or speak with “urgency” using simple tags. Paired with a model that detects frustration in the caller’s prosody, the agent can shift to a more empathetic delivery automatically. (Cartesia Sonic)

ElevenLabs v3: ElevenLabs has redefined expectations with its v3 model, which gives us control over the performance through Audio Tags, such as directing an agent to [whisper] a secret or [pause] for effect. (ElevenLabs v3)
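Tags only help if something decides when to use them. The Python sketch below assumes a hypothetical 0 to 1 frustration score produced by a separate detector listening to the caller, and prefixes the agent’s next reply with delivery tags once the score crosses a threshold. The threshold, the apology wording, and the `[calm]` tag are made up for illustration, and `[pause]` simply follows the notation above; each vendor defines its own tag set, so check the Cartesia and ElevenLabs documentation for the exact syntax.

```python
# HYPOTHETICAL tag names in the article's [bracket] notation; real tag sets
# differ per vendor and model.
CALM_TAG = "[calm]"
PAUSE_TAG = "[pause]"


def style_reply(reply: str, frustration: float, threshold: float = 0.6) -> str:
    """Prefix a reply with delivery tags when the caller sounds frustrated.

    `frustration` is assumed to be a 0-1 score from a prosody or sentiment
    detector on the caller's audio (hypothetical; not part of either TTS API).
    """
    if not 0.0 <= frustration <= 1.0:
        raise ValueError("frustration must be between 0 and 1")
    if frustration >= threshold:
        return f"{CALM_TAG} I understand, that's frustrating. {PAUSE_TAG} {reply}"
    return reply


if __name__ == "__main__":
    print(style_reply("Your order ships tomorrow.", frustration=0.2))
    print(style_reply("Your order ships tomorrow.", frustration=0.85))
```

Keeping detection and delivery as separate steps means the same logic works whether the tags end up in a Sonic-3 request or an ElevenLabs one.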

Global Parity: High-quality voices are no longer exclusive to English. French and even Dutch models are now getting close to English quality, opening the door to global deployments.

We’ve tested these agents with our customers across various scenarios. The feedback has been overwhelmingly positive, and teams report significant time savings from offloading routine calls to the AI.

Practical Applications for 2026

The technology is no longer the bottleneck. The opportunity now lies in application.

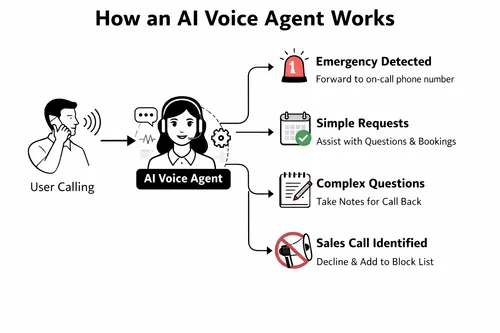

The End of Voicemail (The AI Receptionist): This is the use case with the most immediate ROI for most businesses. An AI receptionist can operate in two modes:

- After-Hours Guardian: Instead of a generic voicemail greeting, the agent takes over the line at 6 PM, answers questions, and books appointments directly into your calendar.

- Full-Time Front Desk: For high-volume businesses, it answers 100% of initial calls, filtering out spam and resolving routine FAQs so your team only talks to qualified leads.
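The two receptionist modes boil down to a routing rule. A minimal Python sketch, assuming office hours of 9 AM to 6 PM on weekdays (the 6 PM handover comes from the example above; the opening time and the weekday-only rule are hypothetical) and hypothetical route labels such as `front_desk` and `ai_agent`:

```python
from datetime import datetime, time
from enum import Enum


class Mode(Enum):
    AFTER_HOURS = "after_hours"  # AI answers only outside office hours
    FULL_TIME = "full_time"  # AI answers every incoming call first


# HYPOTHETICAL office hours: the 6 PM handover matches the example above,
# the 9 AM opening time and the weekday-only rule are assumptions.
OPEN_AT = time(9, 0)
CLOSE_AT = time(18, 0)


def route_call(received_at: datetime, mode: Mode) -> str:
    """Decide who picks up an incoming call: 'front_desk' or 'ai_agent'."""
    if mode is Mode.FULL_TIME:
        return "ai_agent"
    is_weekday = received_at.weekday() < 5  # Monday=0 ... Friday=4
    in_office_hours = OPEN_AT <= received_at.time() < CLOSE_AT
    return "front_desk" if is_weekday and in_office_hours else "ai_agent"


def after_ai_screening(is_spam: bool, is_routine: bool) -> str:
    """Full-time mode: only qualified (non-spam, non-routine) calls reach staff."""
    if is_spam:
        return "end_call"
    if is_routine:
        return "ai_resolves"  # FAQ answered or appointment booked by the agent
    return "transfer_to_staff"


if __name__ == "__main__":
    monday_evening = datetime(2026, 1, 5, 18, 30)
    print(route_call(monday_evening, Mode.AFTER_HOURS))
    print(after_ai_screening(is_spam=False, is_routine=False))
```

In after-hours mode the agent covers evenings and weekends; in full-time mode it screens every call and only hands over the ones that survive screening.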

Other use cases: Outbound lead qualification, appointment scheduling, and voice-activated knowledge bases for field technicians.

Conclusion

The technology stack for voice AI has matured. In 2026, the question is no longer when the tech will be good enough; it is how creatively businesses apply these tools. Latency is no longer a blocker, the voices sound real, and the data connections are standardized.

At Flowful.ai, we can help you build custom voice agents and integrate your data via MCP.